Executive Summary

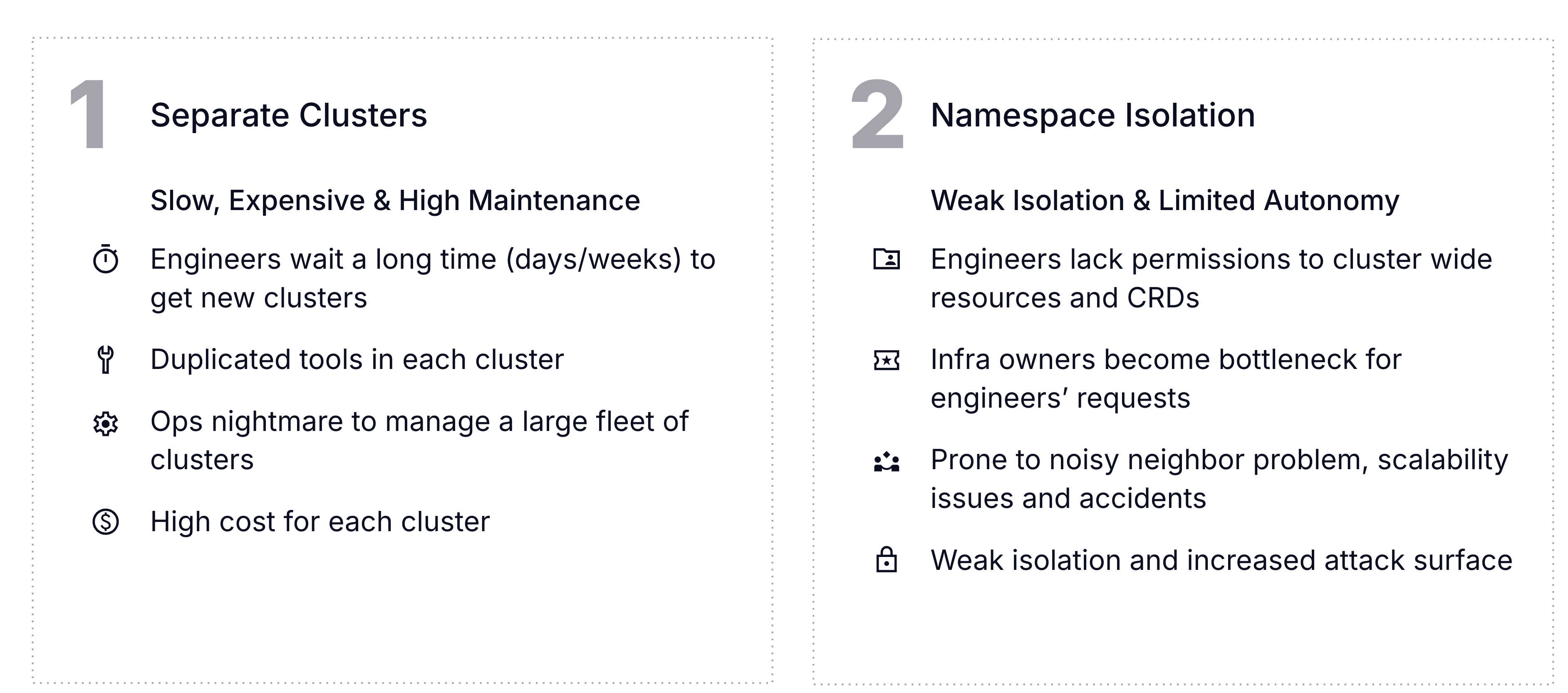

Organizations adopting Kubernetes at scale often face an operational paradox: clusters multiply to meet developer needs, but each additional cluster compounds complexity, cost, and operational burden. Traditional namespace-based multi-tenancy lacks the hard isolation required for regulated, GPU-intensive, or production-critical workloads, while managing dozens or hundreds of full clusters results in “cluster sprawl.”

vCluster addresses this challenge by introducing virtual Kubernetes clusters. Each virtual cluster provides a fully functional API server and control plane, running inside a shared host cluster but isolated from other tenants. This model preserves the autonomy and flexibility of dedicated clusters while consolidating infrastructure, reducing duplication, and enabling advanced features such as sleep mode for idle environments and flexible isolation models.

The result is a platform that balances developer experience, platform team efficiency, and business cost savings. With vCluster, teams can provision environments in minutes, reduce operational overhead, and scale Kubernetes adoption without scaling Kubernetes complexity.

Problem Statement

Kubernetes adoption has surged, but scaling it across enterprises introduces new problems. Application teams often request their own clusters to gain autonomy, but this leads to cluster sprawl: hundreds of EKS, GKE, or AKS clusters, each requiring upgrades, monitoring, and security hardening. The operational tax of managing these clusters becomes unsustainable, especially when platform services (e.g., ingress, logging, DNS) are duplicated across every cluster.

Namespaces, often considered the first step toward multi-tenancy, fall short for many use cases. They lack hard boundaries: CRD version conflicts, policy overlap, and resource contention can cause one tenant’s configuration to impact another. In regulated industries or GPU workloads, this limited isolation is unacceptable. Moreover, developers frequently encounter environment drift (differences between dev, test, and production clusters) that delays releases and increases troubleshooting overhead.

Idle environments compound the issue. Development and test clusters often run nights and weekends at minimal utilization, burning infrastructure spend without delivering value. Meanwhile, extended support costs for outdated managed clusters add hidden expenses. Collectively, these challenges create friction between application teams, who want autonomy, and platform teams, who bear the operational burden.

Solution Overview

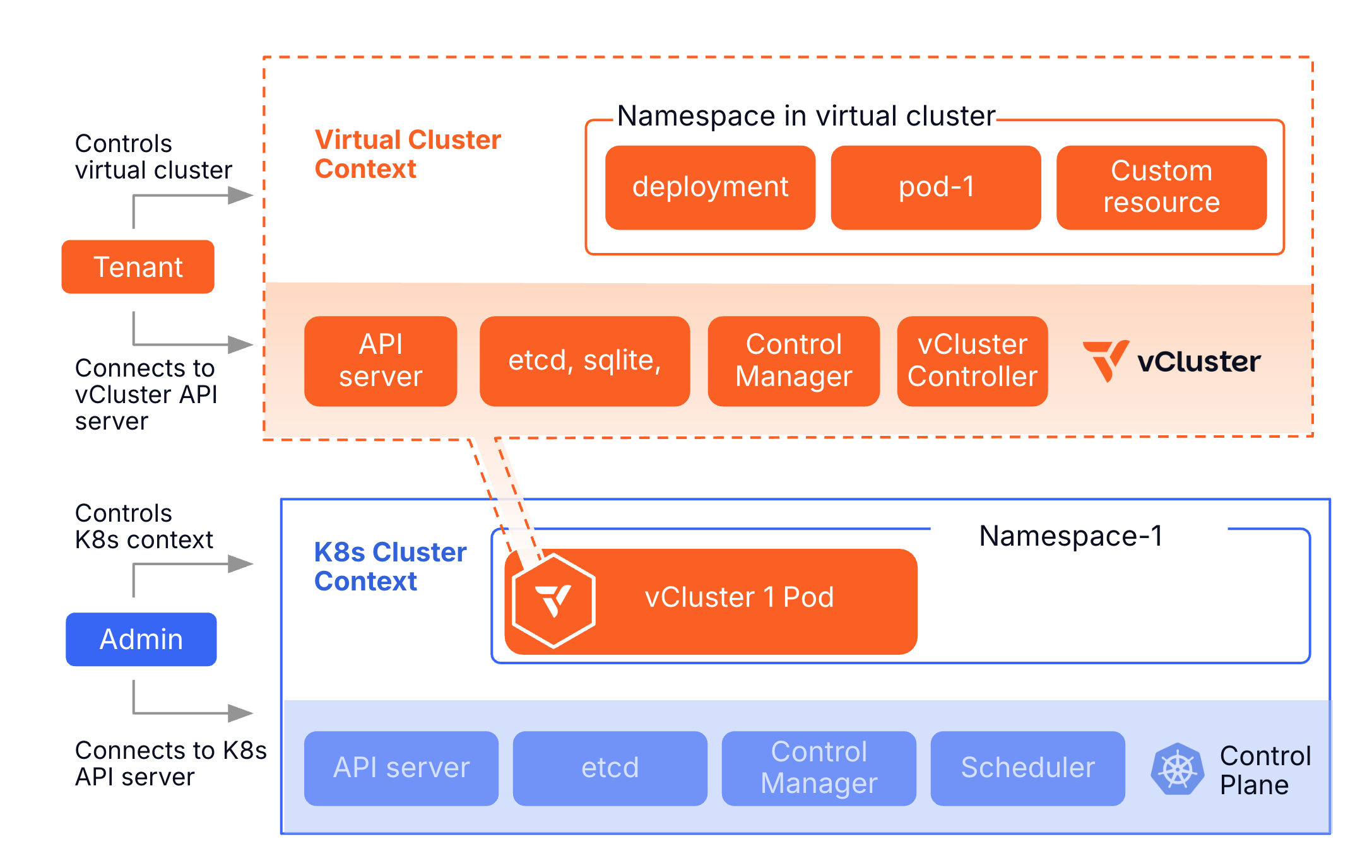

vCluster introduces a new Kubernetes abstraction, the virtual cluster, which bridges the gap between namespaces and full physical clusters.

Each virtual cluster runs its own Kubernetes API server and control plane inside a namespace of a host cluster, giving teams autonomy and isolation without duplicating infrastructure.

Developers enjoy the “feels like my own cluster” experience, while platform engineers centralize shared services, enforce governance, and streamline operations.

To accommodate diverse teams, workloads, and infrastructure requirements, vCluster offers a spectrum of tenancy options, or isolation tiers, allowing organizations to choose the right balance of performance, cost, and control for each use case.

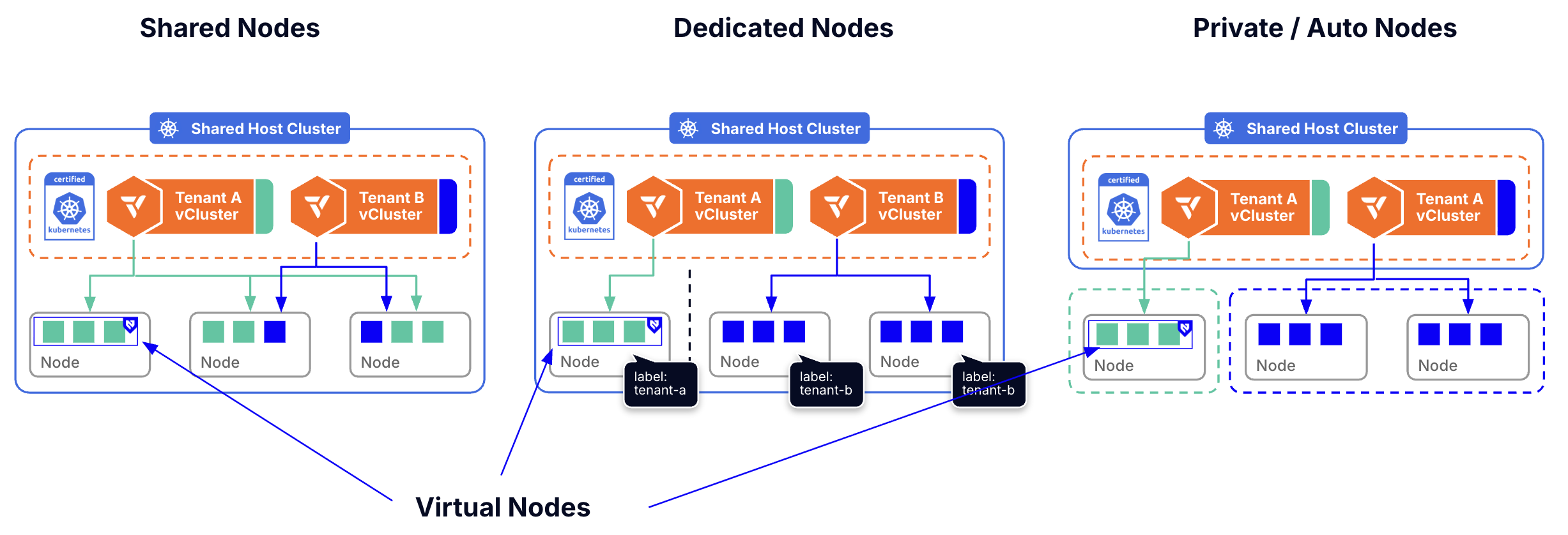

Tier 1 – Shared Isolation

- Powered by Shared Nodes, this tier is ideal for development, testing, and CI/CD environments where agility and utilization matter more than hard isolation.

- Multiple teams share host nodes, plugins, and system components for maximum density and cost efficiency.

Tier 2 – Runtime Isolation

- Backed by Virtual Nodes, this tier mitigates noisy-neighbor effects, isolates privileged workloads, and enhances multi-tenant stability without requiring dedicated hardware.

- Workloads run within secure, sandboxed virtual nodes to provide strong runtime separation while still leveraging shared infrastructure.

Tier 3 – Dedicated Isolation

- Enabled by Dedicated Nodes, this tier is best suited for production or latency-sensitive workloads that demand consistent performance.

- Teams are allocated exclusive physical or logical node groups, ensuring predictable performance and preventing cross-team contention.

Tier 4 – Data Plane Isolation

- Enabled through Private Nodes, this tier delivers compliance-grade isolation and hybrid-cloud flexibility for regulated or mission-critical workloads.

- Each team operates fully independent data planes with its own CNI, CSI, and networking stack.

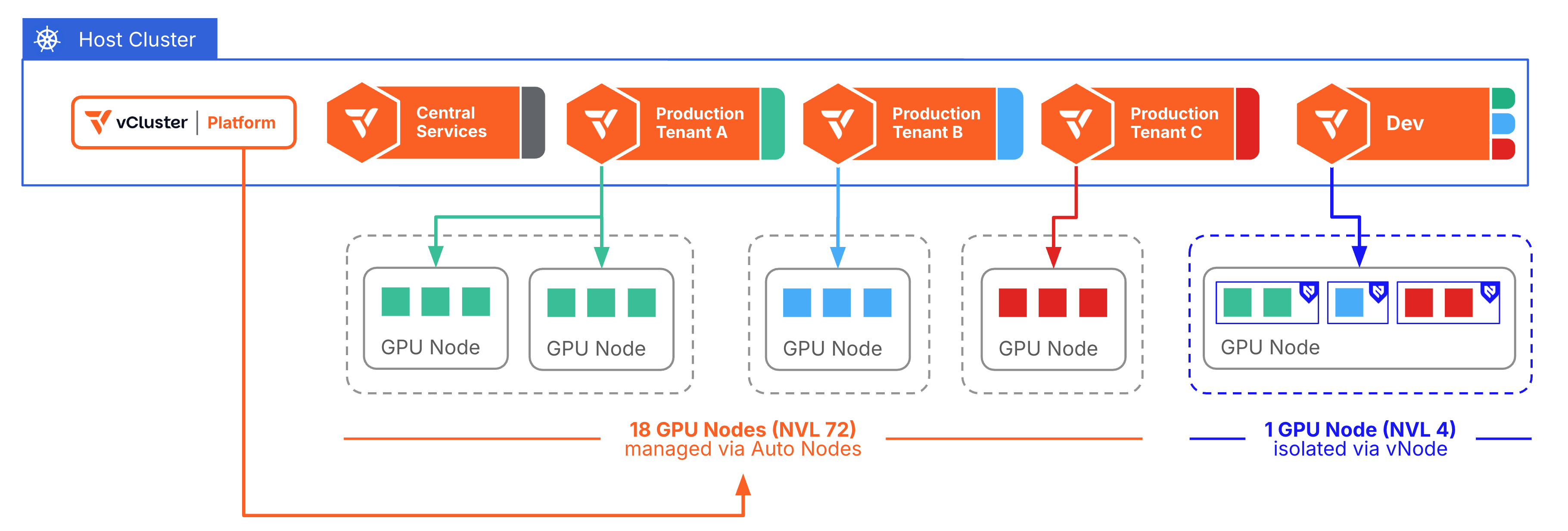

Tier 5 – Dynamic Isolation

- Powered by Auto Nodes, this tier delivers elastic compute, network-level isolation, and full lifecycle automation, seamlessly spanning cloud, on-prem, and bare-metal environments.

- vCluster automatically scales and isolates infrastructure based on workload demand using tenant-aware orchestration.

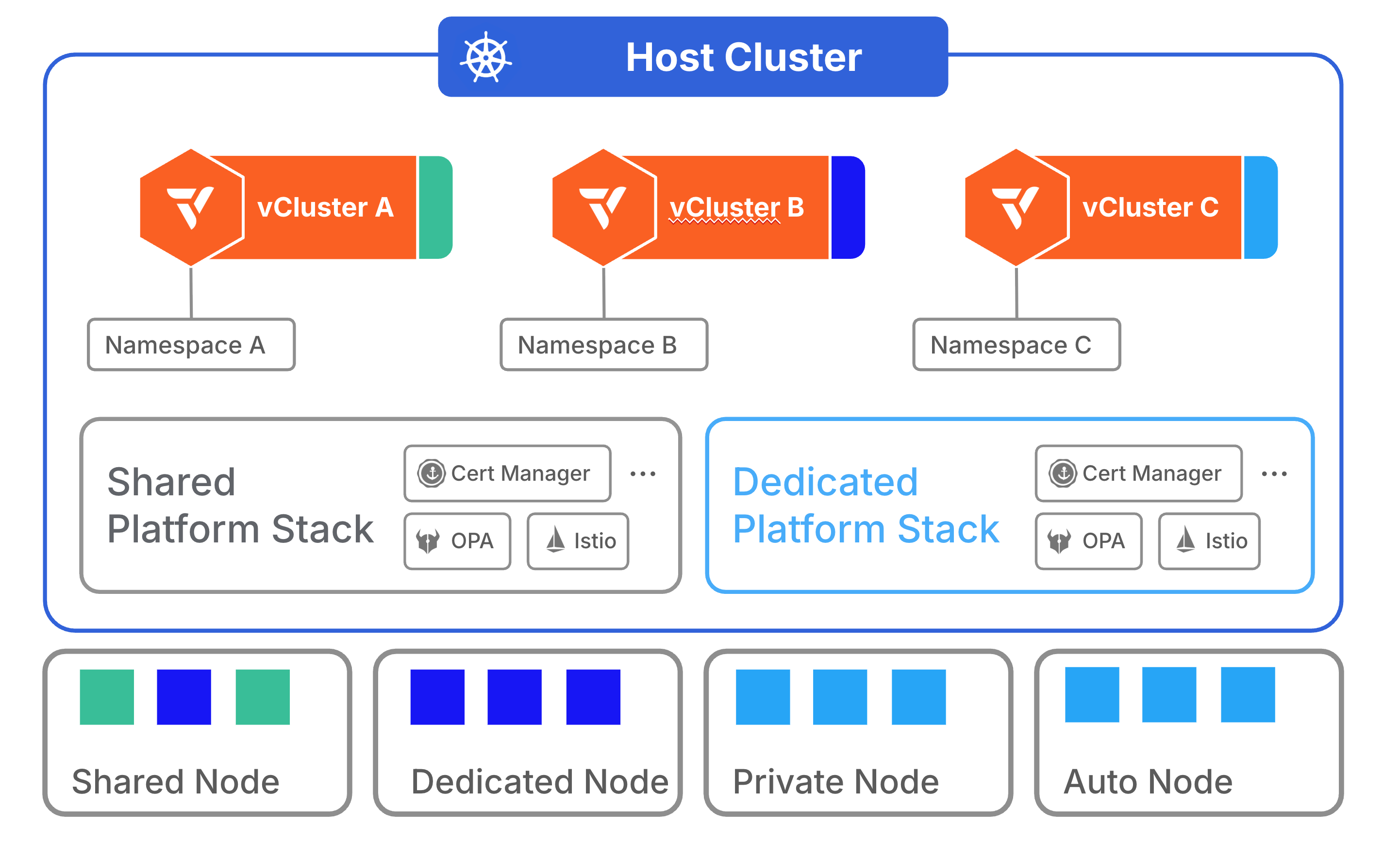
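The five tiers can be read as a simple decision table. The sketch below is one hypothetical way a platform team might encode it. The tier numbers and node types come from the descriptions above, while the `Workload` flags and the precedence order in `choose_tier` are assumptions made for illustration, not vCluster behavior.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    # (tier number, node type named in the tier descriptions)
    SHARED = (1, "Shared Nodes")
    RUNTIME = (2, "Virtual Nodes")
    DEDICATED = (3, "Dedicated Nodes")
    DATA_PLANE = (4, "Private Nodes")
    DYNAMIC = (5, "Auto Nodes")

    @property
    def number(self) -> int:
        return self.value[0]

    @property
    def node_type(self) -> str:
        return self.value[1]


@dataclass(frozen=True)
class Workload:
    # Hypothetical requirement flags; not part of any vCluster API.
    needs_elastic_infra: bool = False
    regulated: bool = False
    latency_sensitive: bool = False
    runs_privileged: bool = False


def choose_tier(workload: Workload) -> Tier:
    """Return the strongest tier that any requirement asks for (assumed order)."""
    if workload.needs_elastic_infra:
        return Tier.DYNAMIC
    if workload.regulated:
        return Tier.DATA_PLANE
    if workload.latency_sensitive:
        return Tier.DEDICATED
    if workload.runs_privileged:
        return Tier.RUNTIME
    # Default: dev, test, and CI/CD workloads share host nodes.
    return Tier.SHARED


tier = choose_tier(Workload(latency_sensitive=True))
print(tier.number, tier.node_type)
```

Checking the strictest requirement first means a workload that is both regulated and latency-sensitive lands on Private Nodes rather than Dedicated Nodes, which seems the safer default under these assumptions.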

Beyond Isolation

These isolation tiers give platform teams a configurable spectrum of Kubernetes operation, from lightweight shared development spaces to fully autonomous and self-scaling clusters, all orchestrated under the same vCluster platform. Across all tiers, vCluster extends beyond isolation to simplify how Kubernetes environments are managed, scaled, and maintained.

- Sleep Mode automatically suspends inactive clusters, reducing idle compute costs while keeping state intact for instant reactivation.

- Templated Provisioning enforces consistent configurations and security guardrails across dev, test, and production environments, ensuring every virtual cluster follows organizational standards.

- Application-Style Upgrades, executed via GitOps or Helm, allow teams to upgrade virtual clusters independently of the host, completing in minutes instead of hours.

Together, these capabilities transform Kubernetes operations from manual cluster management to policy-driven automation, combining isolation, efficiency, and simplicity under one platform.
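Sleep Mode, as described above, comes down to an idle-timeout decision: suspend a cluster once nothing has touched it for a configured period, and wake it on the next request. The following is a minimal sketch of that logic only. The `VirtualCluster` record, the function names, and the timeout value are hypothetical and do not reflect vCluster's actual implementation or configuration keys.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class VirtualCluster:
    # Hypothetical bookkeeping record for one virtual cluster.
    name: str
    last_activity: datetime
    sleeping: bool = False


def reconcile_sleep(vc: VirtualCluster, now: datetime, idle_timeout: timedelta) -> bool:
    """Mark the cluster as sleeping once it has been idle for idle_timeout."""
    if not vc.sleeping and now - vc.last_activity >= idle_timeout:
        vc.sleeping = True
    return vc.sleeping


def record_activity(vc: VirtualCluster, now: datetime) -> None:
    """Any incoming request refreshes the activity clock and wakes the cluster."""
    vc.last_activity = now
    vc.sleeping = False


vc = VirtualCluster("dev-team-a", last_activity=datetime(2024, 1, 1, 18, 0))
timeout = timedelta(hours=1)
print(reconcile_sleep(vc, datetime(2024, 1, 1, 18, 30), timeout))
print(reconcile_sleep(vc, datetime(2024, 1, 1, 19, 0), timeout))
```

In a real system the state that survives suspension lives in the control plane's backing store, so only the sleeping flag and the activity timestamp need to be tracked by a loop like this one.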

Architecture Details

The architecture has three layers:

- Host Cluster – Provides the underlying compute, networking, and storage. Shared platform services like ingress, monitoring, and policy engines live here.

- Virtual Cluster Control Plane – Each tenant gets an isolated API server and control plane, running inside a namespace. This separation ensures teams can install their own CRDs, manage RBAC, and operate independently without risking interference with the host or other tenants.

- Workload Placement – Workloads run on shared nodes, dedicated node groups, or private nodes depending on the isolation model chosen.

Isolation is configurable. In shared-node mode, vCluster workloads run as pods scheduled on the host’s nodes, with sync controllers mapping tenant resources to host equivalents. In dedicated-node mode, tenant workloads are confined to labeled subsets of host nodes, reducing cross-tenant interference. For maximum isolation, private-node mode attaches dedicated nodes directly to the vCluster, with its own CNI and CSI stack, ensuring strong compliance and workload separation.
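To make the sync step concrete, the sketch below shows one way a tenant pod could be mapped to a host equivalent, with an optional node selector standing in for dedicated-node mode. The `-x-` naming pattern, the label keys, and the function name are illustrative assumptions chosen to avoid name collisions in the host namespace. They should not be read as the exact scheme the vCluster syncer uses.

```python
from typing import Dict, Optional


def to_host_pod(
    tenant_pod: dict,
    vcluster: str,
    host_namespace: str,
    node_selector: Optional[Dict[str, str]] = None,
) -> dict:
    """Translate a tenant pod manifest into the object created on the host."""
    meta = tenant_pod["metadata"]
    # Encode the tenant name and namespace so pods from different virtual
    # namespaces cannot collide inside the single host namespace.
    host_name = f'{meta["name"]}-x-{meta["namespace"]}-x-{vcluster}'

    spec = dict(tenant_pod.get("spec", {}))  # shallow copy; input is not mutated
    if node_selector:
        # Dedicated-node mode: confine the pod to a labeled subset of host nodes.
        spec["nodeSelector"] = {**spec.get("nodeSelector", {}), **node_selector}

    return {
        "metadata": {
            "name": host_name,
            "namespace": host_namespace,
            # Hypothetical labels that record where the object came from.
            "labels": {
                "example.io/vcluster": vcluster,
                "example.io/tenant-namespace": meta["namespace"],
            },
        },
        "spec": spec,
    }


pod = {"metadata": {"name": "web", "namespace": "default"}, "spec": {"containers": []}}
host_pod = to_host_pod(pod, "team-a", "vc-team-a", {"tenant": "team-a"})
print(host_pod["metadata"]["name"])
```

Shared-node mode corresponds to calling the function without `node_selector`, in which case the host scheduler is free to place the pod on any node.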

Platform services such as ingress controllers, service meshes, and observability agents run centrally in the host cluster. Tenants consume these services without duplicating deployments, reducing overhead and standardizing compliance. Networking is carefully managed: tenants can request ingress resources, but enforcement policies and quotas ensure fair load balancer allocation. This model preserves Kubernetes best practices around RBAC scoping, observability, and multi-tenancy while giving teams autonomy.
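The fair-allocation idea for load balancers can be sketched as a per-tenant quota check applied before a request is admitted. This is a simplified illustration only. The `LoadBalancerQuota` class and its methods are hypothetical, and in practice such limits would more likely be enforced through Kubernetes resource quotas or an admission policy on the host.

```python
from collections import Counter
from typing import Dict


class LoadBalancerQuota:
    """Track load balancer allocations per tenant against fixed limits."""

    def __init__(self, limits: Dict[str, int], default_limit: int = 0) -> None:
        self._limits = dict(limits)
        self._default_limit = default_limit  # applies to tenants without an entry
        self._in_use: Counter = Counter()

    def try_allocate(self, tenant: str) -> bool:
        """Admit the request only if the tenant is still under its limit."""
        limit = self._limits.get(tenant, self._default_limit)
        if self._in_use[tenant] >= limit:
            return False
        self._in_use[tenant] += 1
        return True

    def release(self, tenant: str) -> None:
        """Return one allocation to the pool when a load balancer is deleted."""
        if self._in_use[tenant] > 0:
            self._in_use[tenant] -= 1


quota = LoadBalancerQuota({"team-a": 1, "team-b": 2})
print(quota.try_allocate("team-a"))
print(quota.try_allocate("team-a"))
```

Defaulting unknown tenants to a limit of zero keeps the check fail-closed, so a tenant must be registered before it can consume shared load balancers.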

This design keeps the platform team in control of shared infrastructure while giving developers freedom within their own clusters. Clear ownership boundaries are established: if an application fails inside a vCluster, the responsibility lies with the application team; if a shared service fails, it is owned by the platform team.

Use Cases

Development and Test Environments: Developers can spin up ephemeral virtual clusters that mirror production without incurring the cost of full clusters. Sleep mode suspends them when idle, dramatically reducing waste.

Blue-Green and Canary Deployments: Instead of creating separate managed clusters, teams can launch virtual clusters to validate new versions, then decommission them after rollout. This reduces operational overhead while improving release safety.

Multi-Tenant SaaS Platforms: SaaS vendors can provision per-customer virtual clusters for strong isolation. Customers perceive full autonomy, while providers benefit from shared infrastructure efficiency.

GPU and Regulated Workloads: Virtual Node and Auto Node modes enable GPU-aware scheduling and compliance-grade isolation. Platform teams can maximize GPU utilization while ensuring that tenant workloads cannot interfere with one another.

Platform Consolidation: By centralizing ingress, logging, DNS, and policy enforcement, vCluster eliminates duplication across dozens of clusters, reducing operational burden and enforcing consistent standards.

Performance and Benchmarking

vCluster improves performance and efficiency across several key dimensions.

Provisioning speed is a standout metric: virtual clusters typically come online in seconds, compared to 30+ minutes for full managed clusters. This accelerates developer onboarding and CI/CD pipelines.

Workload density and cost efficiency are achieved by consolidating platform services and leveraging sleep mode. Early adopters report cutting cluster counts by more than half while maintaining workload isolation, reducing both compute costs and managed service fees.

Noisy-neighbor mitigation is validated through sandboxed virtual nodes, which isolate tenant workloads without sacrificing performance consistency.

Upgrades, a major pain point in Kubernetes operations, are simplified. Instead of disruptive, hours-long managed cluster upgrades, virtual clusters are upgraded like applications, with rollbacks supported by GitOps workflows. Benchmarks show vCluster upgrades complete in under ten minutes with minimal tenant downtime.

Importantly, vCluster allows multiple virtual clusters to run on the same host cluster, each with its own independent Kubernetes version, though some features may be restricted depending on host capabilities. This unique capability not only improves resource utilization but also enables powerful testing and compatibility scenarios, such as validating workloads against future Kubernetes versions, without provisioning new infrastructure.

Conclusion

As enterprises scale Kubernetes adoption, they face a choice: either continue multiplying clusters at great cost and complexity, or adopt a model that balances autonomy, efficiency, and governance. vCluster represents that model. By delivering per-tenant virtual clusters with flexible isolation, shared platform services, and lifecycle features like sleep mode and decoupled upgrades, it reduces both the hard and soft costs of running Kubernetes at scale.

The platform benefits developers, platform teams, and business stakeholders alike: faster provisioning, simplified upgrades, clear ownership boundaries, and measurable cost reductions. From SaaS providers seeking secure multi-tenancy to enterprises optimizing GPU utilization, vCluster is an enabler of Kubernetes without compromise.

The path forward is clear: fewer clusters, more productivity, and multi-tenancy that finally works.

Learn more about the different Tenancy Models that vCluster enables.

Deploy your first virtual cluster today.