Introducing vCluster Auto Nodes: Karpenter-Based Dynamic Autoscaling Anywhere

A few weeks ago, we introduced one of the biggest changes to vCluster since our first release in 2021: Private Nodes. It redefined how workloads run in virtual clusters by allowing users to attach dedicated worker nodes directly to the vCluster control plane without the need to have these nodes join a host cluster. They’re entirely private to the virtual cluster, ideal for fully isolated, tenant-scoped workloads.

With Private Nodes, vCluster users can now effectively run single-tenant clusters with hard isolation and no cross-tenant bleed-through. We didn’t add this capability earlier because we strive for efficient and lightweight tenancy models, and multi-tenancy was the most resource-efficient way to run Kubernetes. So before adding a feature like Private Nodes, we had to solve this missing piece:

How do you scale these isolated environments without overprovisioning, wasting compute, or tying yourself to a specific cloud provider’s auto-scaled or serverless K8s approach?

Today, we’re answering that question with the launch of Auto Nodes.

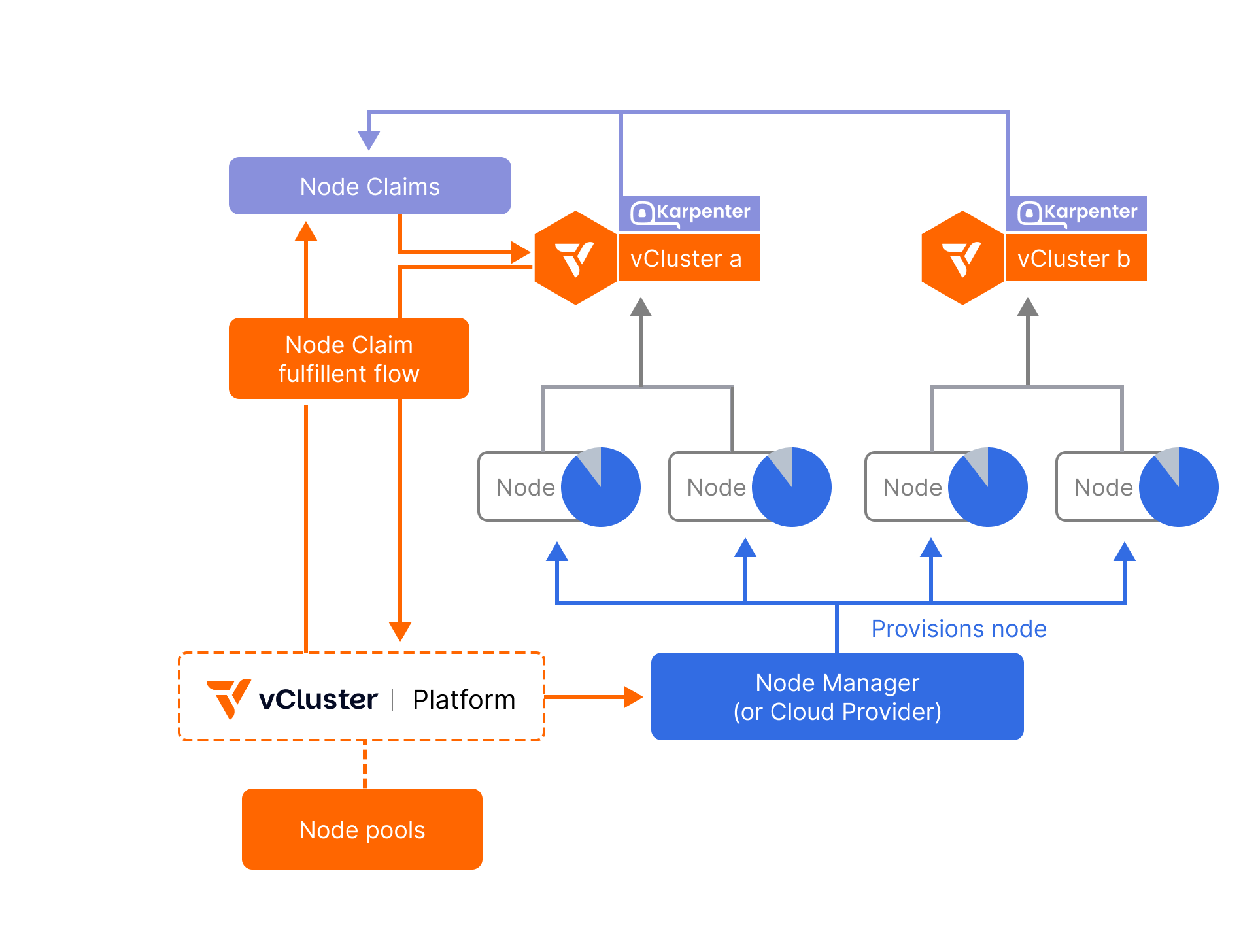

Auto Nodes brings dynamic autoscaling to Private Nodes, making it easy for virtual clusters to grow and shrink based on workload demand, with full isolation, across any infrastructure.

It’s powered by the same open-source engine behind EKS Auto Mode: Karpenter. But instead of just solving this for a single cloud provider such as AWS, Auto Nodes works anywhere and even across environments: You can combine nodes from public cloud, private cloud, and even bare metal environments into a Kubernetes cluster.

This is more than just scaling. It’s a completely dynamic, isolated, and cloud-agnostic Kubernetes directly built into every virtual cluster.

With Private Nodes, we solve for the strictest isolation requirements.

With Auto Nodes, we solve the need for elasticity and fluctuating resource demands.

Together, they unlock a new level of flexibility:

Whether you’re training AI models on-prem, bursting CI jobs to the cloud, or serving multi-tenant SaaS customers with strict compliance needs, Auto Nodes from vCluster lets you scale those environments dynamically, without losing control.

Auto Nodes embeds a Karpenter operator inside each vCluster, enabling each vCluster control plane to manage its own autoscaling logic independently.

Here’s how this can look in action:

It’s a complete autoscaling loop based on a declarative and highly customizable auto nodes config specified in the vcluster.yaml of each virtual cluster.

You can even define constraints like instance types, operating systems, or GPU requirements per environment:

    # vcluster.yaml with Auto Nodes configured
    privateNodes:
      enabled: true
      autoNodes:
        dynamic:
        - name: gcp-nodes
          provider: gcp
          requirements:
            property: instance-type
            operator: In
            values: ["e2-standard-4", "e2-standard-8"]
        - name: aws-nodes
          provider: aws
          requirements:
            property: instance-type
            operator: In
            values: ["t3.medium", "t3.large"]
        - name: private-cloud-openstack-nodes
          provider: openstack
          requirements:
            property: os
            value: ubuntu

The above config would allow you to:

Additionally, Auto Nodes can automatically set up network isolation, load balancers, and a VPN that spans the control plane and nodes.
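To make the autoscaling loop more concrete, here is a minimal sketch of a workload that could trigger it. Karpenter provisions capacity in response to pods the scheduler cannot place, so a Deployment whose combined resource requests exceed the currently attached nodes is the kind of signal Auto Nodes would be expected to react to. This is plain Kubernetes, not vCluster-specific configuration; the name `burst-workers`, the replica count, and the request sizes are illustrative assumptions.

```yaml
# Hypothetical workload applied inside the virtual cluster.
# Names, replica count, and request sizes are illustrative only.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: burst-workers
spec:
  replicas: 10
  selector:
    matchLabels:
      app: burst-workers
  template:
    metadata:
      labels:
        app: burst-workers
    spec:
      containers:
        - name: worker
          # Placeholder image that does no work; it only reserves resources.
          image: registry.k8s.io/pause:3.9
          resources:
            requests:
              cpu: "2"       # 10 replicas x 2 CPU = 20 CPU requested in total
              memory: 4Gi
```

If the attached nodes cannot fit all ten replicas, the remaining pods stay Pending until new nodes that satisfy the configured requirements join the virtual cluster. Scaling the Deployment back to zero replicas leaves those nodes empty, at which point the autoscaler can remove them again.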

Not every team is running Kubernetes on the public cloud, and even those that do often need more control than managed services provide.

That’s why Auto Nodes isn’t just a point solution for a single public cloud. It’s a solution that works anywhere and even across data centers and cloud boundaries.

The initial scope for Auto Nodes includes a set of easy-to-configure Node Providers that cover nearly any environment you want to add to your Karpenter-autoscaled vCluster control plane:

Wherever you want your infra to run, Auto Nodes will make sure you do it in the most efficient and automated way.

Kubernetes isn’t just a control plane, it’s an operating model. And in that model, isolation and elasticity are two of the hardest challenges to solve together.

Private Nodes and Auto Nodes combined help you build the most optimized Kubernetes clusters possible with capacity management on autopilot.

This means:

Every virtual cluster can now act as its own scalable unit, requesting exactly what it needs, scaling up and down based on real workload demands, and doing it all while ensuring strict tenant isolation.

Auto Nodes is now available in vCluster Platform v4.4 and vCluster v0.28. And this is just the beginning.

Coming October 1, we’ll release vCluster Standalone, a new deployment model that runs the vCluster control plane on VMs or bare metal, bypassing the need for a host Kubernetes cluster entirely. That means you can run vCluster as the host cluster and then run further vCluster control planes in pods on top of that host cluster. It’s vCluster all the way for anyone who wants to go all-in on vCluster.

The announcement of Auto Nodes is one of the biggest releases since we launched vCluster in 2021.

With Auto Nodes, we’re turning virtual clusters into first-class, elastic clusters that can scale independently, isolate tenants securely, and help Kubernetes admins run efficiently autoscaled clusters anywhere.

We have another big announcement planned called vCluster Standalone. If you want to learn more, check it out here: 👉 vcluster.com/launch

Thanks for building the future of Kubernetes with us.

— Lukas Gentele

CEO & Co-Founder, vCluster Labs
